How much of what OpenAI’s impressive new chatbot tells you can be believed? Let’s look at ChatGPT’s downsides. ChatGPT is a powerful AI chatbot that is quick to impress, yet plenty of people have pointed out that it has some serious flaws. With no sign of AI progress slowing down, understanding those flaws matters more than ever. Here are some of the biggest problems with ChatGPT.

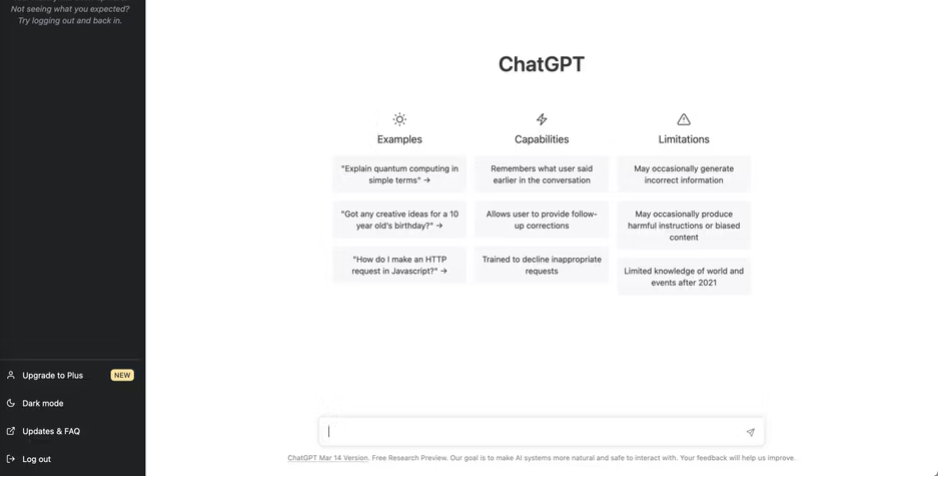

What Is ChatGPT?

ChatGPT is a large language model designed to mimic natural human language. You can talk to ChatGPT much as you would to a person: it remembers what you said earlier in the conversation and can correct itself when challenged.

It was trained on a wide range of internet text, including Wikipedia, blog posts, books, and academic articles. As well as responding in a human-like way, it can recall information about the present-day world and draw on historical information.

Learning how to use ChatGPT is easy, and it is just as easy to assume that the AI system works without any problems. However, since its release, significant issues have emerged around privacy, security, and its broader effects on people’s lives, from jobs to education.

Security Threats and Privacy Concerns

For good reason, there are many things you should not share with AI chatbots. Entering personal details or confidential information from your workplace carries real risk: your chat history is stored on OpenAI’s servers, and a small number of third-party organisations may have access to it.

Placing your data in OpenAI’s care has also proved problematic. In March 2023, a security flaw in ChatGPT caused some users to see chat titles in the sidebar that didn’t belong to them. Accidentally exposing users’ chat histories would be a concern for any tech company, but it is especially serious given how many people use the popular chatbot.

Reuters reported that ChatGPT had 100 million active users in January 2023 alone. Although the bug behind the breach was quickly fixed, Italy banned ChatGPT and demanded that it stop processing Italian users’ data.

The watchdog believed that European privacy laws were being broken. After investigating, it set out several demands that OpenAI had to meet before the chatbot could be reinstated.

OpenAI eventually resolved the matter with the regulators by making several significant changes. The app is now restricted to people aged 18 or older, or 13 or older with parental consent. It also made its Privacy Policy more visible and provided a Google form that users can submit to opt out of having their data used for training or to delete their ChatGPT history entirely.

Although these changes are a great start, they should be extended to all ChatGPT users.

You might think you would never give up personal information so readily, but anyone can let something slip. A prime example came when a Samsung employee shared company data with ChatGPT.

Concerns Over ChatGPT Training and Privacy Issues

After ChatGPT’s wildly successful launch, many have questioned how OpenAI trained its model in the first place.

OpenAI revised its privacy policy following the data breach, but, as TechCrunch has reported, the changes might not be enough to satisfy the General Data Protection Regulation (GDPR), the data protection law that applies across Europe.

When OpenAI originally trained ChatGPT, it probably collected personal data. European data laws protect personal data whether it is posted publicly or privately, whereas US laws are less clear-cut.

Artists who say they never consented to their work being used to train an AI model have raised similar objections to ChatGPT’s training data. Meanwhile, Getty Images has sued Stability AI for using copyrighted images to train its AI models.

Unless OpenAI discloses its training data, the lack of transparency makes it difficult to establish whether it acted lawfully. We don’t know the specifics of how ChatGPT was trained, what data was used, where that data came from, or what the system’s architecture looks like.

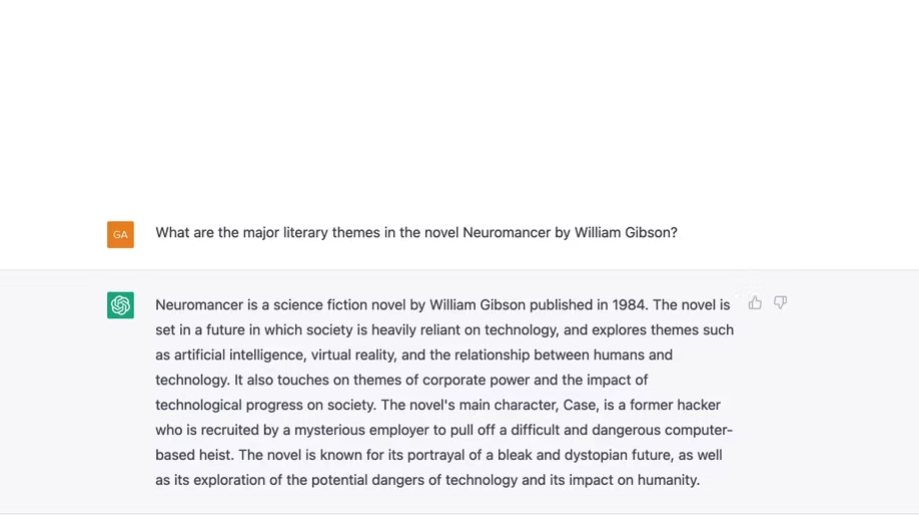

ChatGPT Generates Wrong Answers

ChatGPT struggles with basic maths, seems unable to answer simple logic questions, and will even argue for claims that are entirely untrue. As users on social media can attest, it can get things wrong repeatedly.

OpenAI acknowledges this shortcoming, stating that “ChatGPT sometimes writes plausible-sounding but incorrect or nonsensical answers.” This blurring of fact and fiction, known as “hallucination,” is especially dangerous for matters such as medical advice or getting the facts right about significant historical events.

Unlike AI assistants you may be familiar with, such as Siri or Alexa, ChatGPT did not initially use the internet to find answers. Instead, it built each answer word by word, using its training to select the “token” most likely to come next. In other words, ChatGPT arrives at an answer through a series of educated guesses, which partly explains how it can present wrong answers as if they were completely correct.
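To make the idea of building an answer one token at a time more concrete, here is a deliberately tiny sketch in Python. It is not how ChatGPT is implemented: it swaps the neural network for simple word-pair counts, and the training sentences and the function names `train_bigram_counts` and `generate` are made up for illustration. It does show the same basic loop, though: look at the text so far, pick the most likely next token, and repeat.

```python
from collections import Counter, defaultdict


def train_bigram_counts(corpus):
    """Count how often each word follows each other word in the corpus."""
    counts = defaultdict(Counter)
    for sentence in corpus:
        tokens = sentence.lower().split()
        for current, following in zip(tokens, tokens[1:]):
            counts[current][following] += 1
    return counts


def generate(counts, start, max_tokens=5):
    """Greedily append the most frequent next token, one token at a time."""
    output = [start]
    for _step in range(max_tokens):
        candidates = counts.get(output[-1])
        if not candidates:
            break  # the model has never seen anything follow this token
        next_token = candidates.most_common(1)[0][0]
        output.append(next_token)
    return " ".join(output)


# Hypothetical training text: the false statement appears more often
# than the true one, so the "most likely" continuation is the false one.
toy_corpus = [
    "the moon is made of cheese",
    "the moon is made of cheese",
    "the moon is made of rock",
]
toy_counts = train_bigram_counts(toy_corpus)
print(generate(toy_counts, "the"))
```

Because the toy model only tracks which word tends to follow which, it confidently completes the sentence with whatever was most common in its training text, true or not. Real language models are vastly more sophisticated, but this is one intuition for why a fluent answer is not necessarily a correct one.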

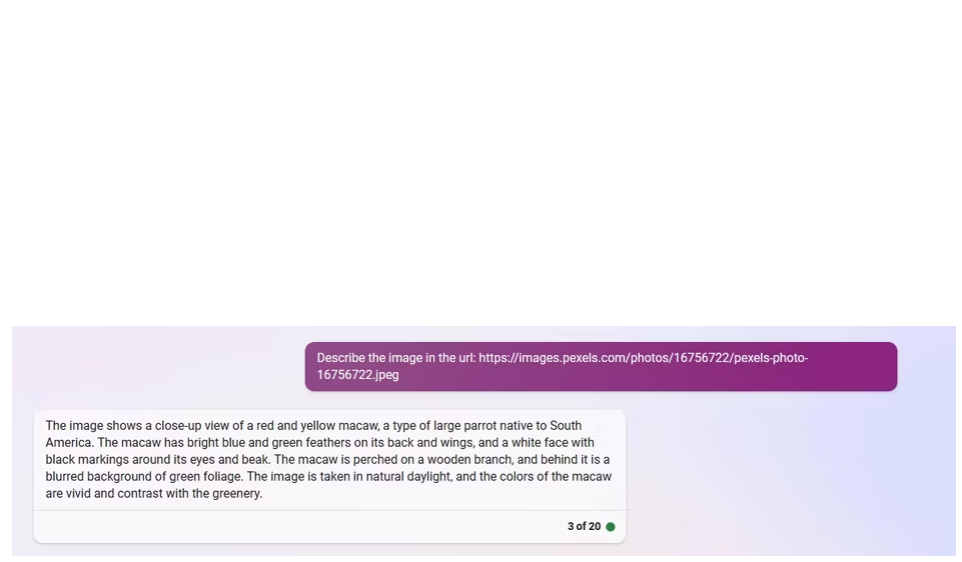

ChatGPT was connected to the internet in March 2023, but the feature was quickly switched off again. OpenAI gave little detail beyond the statement that “ChatGPT Browse beta can occasionally display content in ways we don’t want.”

The Search with Bing feature was built on Microsoft’s Bing AI tool, which has likewise shown that it can’t yet answer your questions reliably. When asked to describe the picture at a URL, it ought to admit that it cannot fulfil the request. Instead, Bing described a red and yellow macaw in great detail, when the URL in fact showed a picture of a man sitting.

More amusing hallucinations can be seen in our comparison of ChatGPT, Microsoft Bing AI, and Google Bard. It is not hard to imagine people using ChatGPT to get quick facts and information and taking the results as true. So far, however, ChatGPT hasn’t managed to get it right, and pairing it with the equally error-prone Bing search engine has only made matters worse.

ChatGPT Has Bias Baked Into Its System

ChatGPT was trained on the collective writing of people past and present. Unfortunately, this means that the same biases that exist in the real world can also appear in the model.

ChatGPT has been shown to produce responses that discriminate against women, people of colour, and other marginalised groups, and the company is working to reduce them.

One explanation for this problem points to the data, blaming humanity for the prejudices ingrained in the internet and beyond. But OpenAI, whose researchers and engineers select the data used to train ChatGPT, shares some of the responsibility.

Once again, OpenAI is aware of the problem and has said that it is tackling “biased behaviour” by collecting user feedback and encouraging users to flag ChatGPT outputs that are subpar, disrespectful, or simply wrong.

You could argue that, because these issues could harm people, ChatGPT shouldn’t have been released to the public until they had been studied and resolved. But OpenAI may have thrown caution to the wind in the race to be the first company to build the most powerful AI model.

By contrast, Google’s parent company, Alphabet, unveiled a comparable AI chatbot called Sparrow in September 2022, but deliberately kept it behind closed doors because of similar safety concerns. Around the same time, Facebook released Galactica, an AI language model intended to help with academic research. It was swiftly withdrawn, however, after being widely criticised for producing wrong and biased research-related results.

ChatGPT Might Take Jobs From Humans

The dust has yet to settle after ChatGPT’s rapid development and rollout, but that hasn’t stopped the underlying technology from being built into a number of commercial apps. Duolingo and Khan Academy are two apps that have integrated GPT-4.

The former is a language learning app, while the latter is a wide-ranging educational tool. Both offer what amounts to an AI tutor, either as an AI-powered character you can talk to in the language you are learning or as a tutor that gives you personalised feedback on your work.

This could be just the start of AI taking over human jobs. The professions most exposed to AI include accountancy, writing, and graphic design. The prospect of AI reshaping the workforce in the near future became even more likely when it was reported that a later version of ChatGPT had passed the bar exam, the final hurdle to becoming a lawyer.

Education companies posted heavy losses on the London and New York stock exchanges, highlighting the disruption AI was causing in some sectors just six months after ChatGPT’s launch.

Technological progress has always cost some people their jobs, but the speed of AI development means that many industries are facing rapid change at the same time. AI is already present in the workplace and is influencing a wide range of human occupations. In some jobs, AI tools may take over menial tasks, while other jobs may disappear altogether.

ChatGPT Is Challenging Education

You can ask ChatGPT to edit your writing or provide feedback on how to make a paragraph stronger. Alternatively, you can completely cut yourself out of the picture by asking ChatGPT to handle all of the writing.

Teachers who fed English assignments to ChatGPT found that the results were often better than what many of their students could produce. From writing cover letters to summarising the main themes of a famous work of literature, ChatGPT can do it all without hesitation.

That raises the question: if ChatGPT can write for them, will students still need to learn how to write? It may seem like an existential question, but teachers will soon have to face reality, because students have already begun using ChatGPT to help write their essays.

Unsurprisingly, students are already experimenting with AI. According to The Stanford Daily, early surveys indicate that a sizable share of students have used AI to help with homework and exams.

Schools and universities are now updating their policies and deciding whether students may use AI to help with an assignment. The risk isn’t limited to English-based subjects; ChatGPT can help with any task that involves brainstorming, summarising, or drawing analytical conclusions.

ChatGPT Can Cause Real-World Harm

It didn’t take long for someone to try to jailbreak ChatGPT, producing an AI model that could bypass the safeguards OpenAI put in place to stop it from generating offensive and harmful text.

A group of users on the ChatGPT Reddit community named their unrestricted AI model DAN, short for “Do Anything Now.” Sadly, that freedom to do anything has led to hackers ramping up online scams. Hackers have also been caught selling rule-free ChatGPT services that generate phishing emails and malware, although the AI-written malware has had mixed success.

AI-generated text makes it much harder to spot a phishing email designed to extract sensitive information from you. Grammatical errors, which used to be an obvious red flag, are less common with ChatGPT because it can fluently write all kinds of text, from essays and poems to, of course, dodgy emails.

Stack Exchange, a website dedicated to providing correct answers to everyday questions, has already run into trouble because of how quickly ChatGPT can produce information. Soon after ChatGPT was released, users flooded the site with answers they had asked it to generate.

Without enough human volunteers to work through the backlog, it would be impossible to maintain a high standard of answers, not to mention that many of the answers were wrong. To prevent damage to the website, all answers generated by ChatGPT were banned.

The spread of fake information is another serious concern. The scale at which ChatGPT can produce text, together with its ability to make even false information sound convincingly true, makes everything on the internet questionable. It is a critical combination that amplifies the dangers of deepfake technology.

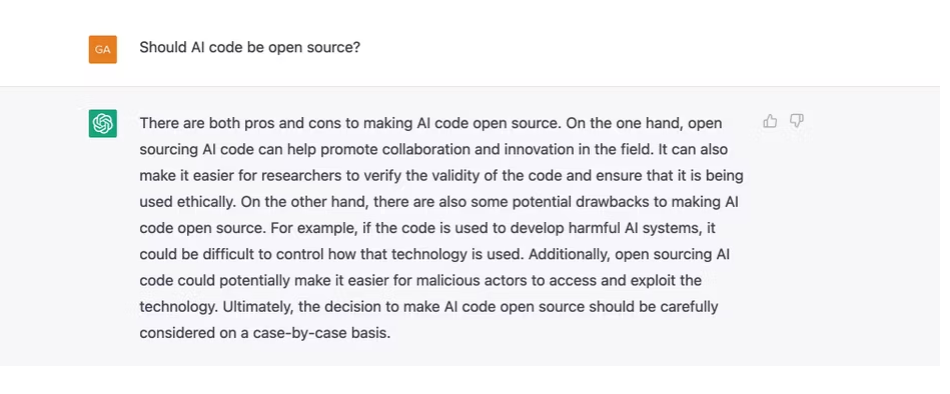

OpenAI Holds All the Power

Enormous power brings enormous responsibility, and OpenAI bears a fair share of it. With not one but several generative AI models, including DALL-E 2, GPT-3, and GPT-4, it is one of the first AI companies to truly shake up the world.

As a private company, OpenAI controls both the data used to train ChatGPT and the pace at which it rolls out new features. Although experts have warned of the dangers posed by AI, OpenAI shows no sign of slowing down.

On the contrary, ChatGPT’s success has sparked a race among big tech companies to launch the next major AI model; contenders include Microsoft’s Bing AI and Google’s Bard. Fearing that such rapid development will lead to serious safety problems, tech leaders from around the world wrote a letter calling for development to be paused.

Although OpenAI treats safety as a high priority, there is still a lot we don’t know about how the models actually work, for better or worse. Ultimately, the only option left to us is to trust blindly that OpenAI will research, develop, and use ChatGPT responsibly.

Whatever we think of its methods, it’s worth remembering that OpenAI is a private company that will continue to develop ChatGPT according to its own goals and ethical standards.

Tackling AI’s Biggest Problems

With ChatGPT, there is a lot to be enthusiastic about, but there are some significant issues that go beyond its practical applications.

OpenAI acknowledges that ChatGPT can produce inaccurate and biased results, but hopes to address the problem by gathering user feedback. However, its ability to produce convincing text even when the facts are false could easily be exploited by bad actors.

Privacy and security breaches have already shown that OpenAI’s systems can be vulnerable, putting users’ personal data at risk. Adding to the trouble, people are jailbreaking ChatGPT and using the unrestricted version to produce malware and scams on a scale we haven’t seen before.

Threats to jobs and the potential to disrupt education are among the other problems piling up. With brand-new technology, it can be hard to predict what problems will arise in the future, but sadly, we don’t have to look very far. ChatGPT has already given us a fair share of challenges to deal with.